Long-Term Federal Investments Improve Severe Weather Prediction

Moreover, earlier forecasts had warned of the probability of significant tornadoes in the central part of the state, following a day when another tornado ravaged parts of Shawnee, 30 miles to the east, so many people knew a tornado could be on the way.

Twenty-five years ago, people in the path of such devastating twisters would have had far less time to prepare, and the toll in lives and property--as terrible as it was for the people of Moore--almost certainly would have been greater.

"It is remarkable that only 24 people died in a city of tens of thousands," says Kelvin K. Droegemeier, director emeritus of the University of Oklahoma's Center for Analysis and Prediction of Storms (CAPS), vice president for research at the university, and vice chairman of the National Science Foundation's (NSF) National Science Board. "Experimental forecasts just 12 hours before the storm formed were remarkably accurate," he adds. "They were able to pinpoint where the storms would begin that day and when, as well as their type and motion."

That the outcome in Moore was far less tragic than it might have been is due in large part to a significant federal scientific investment in research and training in recent years, with the goal of dramatically improving the prediction of severe tornado-producing weather.

By all accounts, it has made a huge difference.

NSF is involved primarily through its Atmospheric and Geospace Sciences Division, part of the agency's Directorate for Geosciences. NSF supports numerical weather prediction model development, field campaigns, instrument development, and education and training--a diverse effort that draws upon the university research community, its Science and Technology Centers (STC), its Engineering Research Centers (ERC), and the National Center for Atmospheric Research (NCAR), also funded by NSF.

"The division supports world-class computational and observational facilities with the theoretical expertise covering the full range of atmospheric phenomena, and provides a high level of access to university and other national and international researchers and forecasters," says Michael Morgan, the division director.

NSF-supported research into severe thunderstorms and tornadoes, particularly model development and observation field campaigns, often involves collaboration with other agencies, such as the National Oceanic and Atmospheric Administration (NOAA).

"Many of the advances in knowledge arising from NSF support regarding storm structure, evolution and predictability of convective mode are now used by severe weather forecasters on a daily basis worldwide," Morgan says.

Furthermore, NSF has launched a new program, Hazards, Science, Engineering, Education for Sustainability (SEES), designed to encourage physical scientists and engineers to work more closely with social and behavioral researchers with the idea of including the human element in understanding and responding to hazards, including severe weather prediction. The goals include finding better ways of communicating threats, understanding how people respond to warnings, and persuading them to trust the information.

"While weather is a physical science and an engineering challenge, it has a profound effect on individuals, families and communities," Droegemeier says. "We must never lose sight of its human impact."

Morgan agrees, saying one of the goals of the new initiative "is to prevent hazards from becoming disasters. We want to improve our nation's resilience. A great forecast that isn't acted upon is of no use to anyone. We have to get it right, and ensure it is well-communicated to the public."

Tornadoes can be especially frightening and potentially damaging. They occur in many parts of the world, but most frequently in the United States, causing about 70 deaths and 1,500 injuries annually, according to NOAA. They arise from thunderstorms, of which there are an estimated 100,000 each year in the United States, 10 percent of them severe, according to NOAA's National Severe Storms Laboratory (NSSL).

NSF's effort to better understand and more accurately predict tornadoes began on Feb. 1, 1989, when it announced it would fund CAPS at the University of Oklahoma, the only weather-related center among 11 created as part of a series of new NSF-supported STCs. Its mission was to take computer weather prediction--until then and for several decades the mainstay of daily forecasting--down to the scale of individual thunderstorms and other high-impact local weather, aiming to provide more accurate predictions and longer warning times.

The concept of establishing specialized science and technology centers was the brainchild of then-NSF Director Erich Bloch. "He believed the agency should use the center mode of research to focus on the most intellectually challenging problems across all scientific disciplines, even those regarded as nearly impossible to solve, with the hope that major societal benefits would result," Droegemeier says.

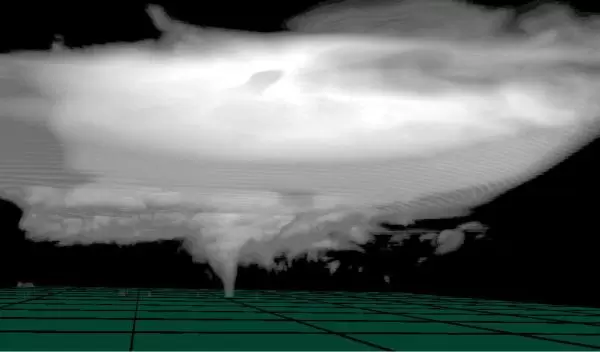

At the time, many weather researchers believed it was theoretically impossible to predict thunderstorms. "CAPS not only spawned a transformation in weather forecasting, but the center is poised to have the ability to numerically predict a tornado as much as an hour or more before it forms," Droegemeier says.

The challenge CAPS faced in 1989 was simple to state but profoundly difficult to address.

Doppler weather radar is the only observational tool that can provide information about wind speeds at fine scales, a critical element of storm prediction. Unfortunately, apart from precipitation intensity, a Doppler radar measures wind speed along only one dimension--a thin beam in the direction the radar is pointing. In addition, the radar provides wind information only where precipitation or other scattering particles are present.
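The one-dimensional limitation can be made concrete with a little geometry. The sketch below is illustrative only and is not drawn from CAPS software: it projects a three-dimensional wind vector onto the beam direction using the standard azimuth/elevation convention, and it ignores the fall speed of precipitation particles. The function name and the sample wind values are assumptions chosen for the example.

```python
import math

def radial_velocity(u, v, w, azimuth_deg, elevation_deg):
    """Project a 3-D wind vector onto the radar beam direction.

    u, v, w are the eastward, northward and upward wind components (m/s).
    Azimuth is measured clockwise from north, elevation up from horizontal.
    A positive result means motion away from the radar.
    Simplification: the fall speed of rain or hail is ignored.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    return (u * math.sin(az) * math.cos(el)
            + v * math.cos(az) * math.cos(el)
            + w * math.sin(el))

# Hypothetical 20 m/s wind blowing toward the east.
wind = (20.0, 0.0, 0.0)

# Beam pointing due east: the radar sees the full wind speed.
along_beam = radial_velocity(*wind, azimuth_deg=90.0, elevation_deg=0.0)

# Beam pointing due north: the same wind is invisible to the radar.
across_beam = radial_velocity(*wind, azimuth_deg=0.0, elevation_deg=0.0)

print(f"along beam:  {along_beam:.1f} m/s")
print(f"across beam: {across_beam:.1f} m/s")
```

The same wind produces a strong signal or none at all depending on where the beam points, which is why a single radar cannot supply the full wind field by itself.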

But numerical forecast models require all three dimensions of the wind, plus pressure, temperature, hydrometeor types (rain, snow, hail, for example), solar and terrestrial radiation, soil composition, land cover characteristics, and numerous other variables and parameters in both the areas of precipitation and clear air.

"For CAPS, this was akin to extracting blood from a turnip," Droegemeier says. "With observations of only one wind component and precipitation intensity, the center needed to find ways to incorporate a wealth of additional information it needed to create a forecast model, and do so quickly while the situation at hand was unfolding."

But preliminary research, completed in 1987 and published in 1991, "showed that extracting blood from the turnip indeed was possible, and numerous articles since then have refined and greatly improved the concept," Droegemeier says.

The work, along with a $4.5 billion restructuring of the National Weather Service, leading to 150 new NEXRAD Doppler radar systems, "raised hopes that it finally might be possible to develop effective computer models that actually could predict individual thunderstorms," he adds.

To that end, CAPS developed new prediction software, released in 1992, specifically designed for parallel computers, that is, those computers with tens or hundreds of thousands of processing units, rather than the one or two found in most home computers.

"It was and remains today, a complete system with tools that could process a wide variety of observations," Droegemeier says.

In 1994, CAPS and NOAA's NSSL and Storm Prediction Center, as part of the NSF- and NOAA-sponsored Verification of the Origins of Rotation in Tornadoes Experiment (VORTEX), began evaluating forecasts produced by the new system, which grew more sophisticated during the next two years.

Based upon an unusually accurate forecast of a severe hail storm in 1995, among other things, American Airlines (AA) funded a three-year, $1 million venture using the new system at major AA hub airports, prompting the project name "Hub-CAPS."

In 1995, with NSF funding, CAPS purchased a Cray J90 supercomputer, and also tapped into two NSF supercomputing centers, the National Center for Supercomputing Applications at the University of Illinois and the Pittsburgh Supercomputing Center, to run its massive experimental forecasts.

At the same time, the new technology also began to move into the private sector. In 1999, following the success of the AA project, Droegemeier, then director of CAPS, established Weather Decision Technologies, which today has more than 80 employees and offices in both the United States and abroad.

During the late 1990s, an effort supported by NOAA began to make high-resolution NEXRAD data available to users outside its National Weather Service, such as researchers and the private sector, for experimental forecasts and product development.

In 1999, collaborating with the NSSL and the Internet2 Consortium (a community of leaders in research, academia, industry and government), among others, CAPS developed a way to compress high-resolution NEXRAD data in real time and transmit it over Abilene, a high-speed network created by Internet2 in the late 1990s. They called the project CRAFT, for Collaborative Radar Acquisition Field Test.

"Starting with a single NEXRAD radar at Fort Worth, Texas, CRAFT eventually encompassed dozens of radars around the country," Droegemeier says. "The data transmission was so fast--and reliable--that the National Weather Service used it to create a formal system to handle NEXRAD data, which remains in use today."

He adds: "It's worth noting that private companies--until CRAFT--could not access the high resolution NEXRAD data stream. Today, however, they are able to provide a broad array of products and services on devices from desktop computers to cellphones."

On May 3, 1999, a vicious tornado hit Moore; an NSF-sponsored mobile Doppler radar system observed its wind speeds to be the highest ever measured on Earth. At the time, CAPS was not producing real-time forecasts, but later experiments using archived NEXRAD and other data showed that the new prediction system it had developed could have forecast the actual Moore storm more than an hour in advance.

"This confirmed the original vision of NSF's Science and Technology Centers in dramatic fashion," Droegemeier says.

In 2004, NOAA formalized the experimental evaluation of fine-scale weather forecasts as the Hazardous Weather Testbed (HWT), a joint activity between its Storm Prediction Center and the NSSL. Numerous other researchers and operational personnel from across the country continue to visit the HWT every year for its spring forecast experiment. "This is the proving ground for research before it becomes operational," Droegemeier says.

Today, CAPS, using NSF's supercomputing resources, "produces 30-member ensemble forecasts at 4 km grid spacing and a single 1 km forecast each day, for the entire United States," Droegemeier says. "Truly, the system is a crown jewel in NOAA's experimental prediction portfolio, and a pioneer in new forecasting concepts."
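An ensemble forecast runs the model many times with slightly different starting conditions, and the spread among the runs gives a sense of confidence. The following is a minimal sketch of that idea, not CAPS code: it uses synthetic rainfall values at a single hypothetical grid point, and the threshold, distribution and function names are all assumptions made for illustration.

```python
import random

def exceedance_probability(member_values, threshold):
    """Fraction of ensemble members at or above a threshold."""
    hits = sum(1 for value in member_values if value >= threshold)
    return hits / len(member_values)

def ensemble_mean(member_values):
    """Simple average across ensemble members."""
    return sum(member_values) / len(member_values)

random.seed(0)   # fixed seed so the synthetic data are repeatable
N_MEMBERS = 30   # matches the 30-member ensemble quoted in the article

# Synthetic hourly rainfall (mm) at one grid point, one value per member.
members = [max(0.0, random.gauss(10.0, 6.0)) for _ in range(N_MEMBERS)]

prob_heavy = exceedance_probability(members, threshold=15.0)
mean_rain = ensemble_mean(members)

# Going from 4 km to 1 km grid spacing multiplies the number of
# horizontal grid points by (4 / 1) squared.
horizontal_point_ratio = (4 / 1) ** 2

print(f"probability of >= 15 mm: {prob_heavy:.2f}")
print(f"ensemble mean rainfall:  {mean_rain:.1f} mm")
print(f"1 km grid has {horizontal_point_ratio:.0f}x the horizontal points of a 4 km grid")
```

The last line hints at why a single 1 km forecast can cost as much computing as many 4 km members: the finer grid has 16 times as many horizontal points before any change in time step is counted.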

The NEXRAD radar system, with its high capacity to detect severe storms, also has an enhanced ability to identify types of precipitation, the result of a recent upgrade.

Furthermore, the NSSL has begun to use phased array technology, which can track weather and aircraft simultaneously, with data updates some ten times faster than NEXRAD's.

"Eventually, this new technology hopefully will replace both NEXRAD and air traffic radars, resulting in billions in savings, and providing a truly integrative weather/aviation capability," Droegemeier says. "Moreover, the rapid update time will enhance storm-resolving models for rapidly evolving weather events."

In 2003, NSF funded the ERC for Collaborative Adaptive Sensing of the Atmosphere (CASA), based at the University of Massachusetts Amherst, with partners at the University of Oklahoma, Colorado State University, the University of Puerto Rico at Mayaguez, and several private companies.

Unlike current conventional radar systems, which typically generate high-bandwidth data compressed from 150 megabits per second to roughly five megabits per second, CASA uses an ultra-high-bandwidth network to transmit uncompressed radar data to a central computer cluster.

When the data are uncompressed, the information is likely to be more accurate, especially with tornadoes, where exact wind measurement is especially important. Information from the new system comes out faster, has higher resolution and is more geographically specific.
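To get a feel for the data rates quoted above, the short calculation below converts them into a compression ratio and hourly volumes. It is a back-of-the-envelope sketch only: it assumes the rates are sustained continuously and uses decimal units (1 gigabyte = 1,000,000,000 bytes); the variable and function names are illustrative.

```python
RAW_MBPS = 150.0        # uncompressed rate quoted in the article
COMPRESSED_MBPS = 5.0   # approximate compressed rate quoted in the article

def gigabytes_per_hour(megabits_per_second):
    """Convert a sustained rate in megabits/s to decimal gigabytes per hour."""
    bits_per_hour = megabits_per_second * 1_000_000 * 3600
    return bits_per_hour / 8 / 1_000_000_000

compression_ratio = RAW_MBPS / COMPRESSED_MBPS
raw_gb = gigabytes_per_hour(RAW_MBPS)
compressed_gb = gigabytes_per_hour(COMPRESSED_MBPS)

print(f"compression ratio: {compression_ratio:.0f}:1")
print(f"uncompressed: {raw_gb:.2f} GB per hour")
print(f"compressed:   {compressed_gb:.2f} GB per hour")
```

Under these assumptions the conventional stream is reduced by a factor of 30, which indicates how much more network capacity an uncompressed feed requires.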

The researchers accomplish this with new detection algorithms that operate directly on uncompressed, high-bandwidth radar data, rather than the compressed data of conventional systems. After-the-fact results have shown it is possible, in some cases, to numerically predict individual tornadoes with timing and location similar to those actually observed, Droegemeier says.

In 2003, NSF announced a five-year, $11.3 million initiative under its Information Technology Research program, led by the University of Oklahoma and involving nine other partners, to study a new paradigm for weather prediction: data systems, models and cyberinfrastructure that adapt automatically to the weather situation of the moment, rather than running on a fixed schedule, as had been the case for decades.

"This research showed the value and possibility of dynamic adaptation," Droegemeier says. "In fact, work now underway will use this very concept for the prediction of tornadoes."

Yet, despite the breathtaking advances of the past 25 years in severe weather prediction, much work remains.

"The most vexing challenge: To use computer models to successfully predict these tornadoes, with a high degree of certainty, an hour or more in advance," Droegemeier says. "Our work will not be done until we achieve the goal of zero deaths."