Computer Algorithms Reveal How the Brain Processes and Perceives Sound in Noisy Environments

Every day the human brain encounters a cacophony of sounds, often all competing for its attention at once.

In the cafeteria, at the mall, in the street, at the playground, even at home, the noises are everywhere. People talk. Music plays. Babies cry. Cars drive by with their windows open and radios on. Fire engine sirens scream. Dogs bark. Lawn mowers roar. The list is endless.

With all of this going on, how does the brain sort out what is important, and what it needs to hear?

That's what Mounya Elhilali is trying to find out.

"We want to understand how the brain processes and perceives sounds in noisy environments, when there are lots of background sounds," says Elhilali, an assistant professor of electrical and computer engineering at the Center for Language and Speech Processing at the Johns Hopkins University. "The ultimate goal is to learn how the brain adapts to different acoustic environments."

Elhilali, who works at the interface of neuroscience and engineering, hopes to use what she learns from basic research to design better products that will enhance communication.

Two processes, known as "bottom-up" and "top-down," occur in the brain when it is exposed to a wide range of sounds, Elhilali says. Hearing many different sounds in a room is "bottom-up," that is, "driven by the sounds around you," while zeroing in on a particular sound, such as a conversation, is "top-down," that is, "controlled by your state of mind," she says, adding: "We are trying to understand the interaction between these two processes."

She and her research colleagues will take this information and construct mathematical models--computer algorithms--of how the brain perceives sounds in a complex environment "and build better machines--computers that can hear like a human brain can hear," she says. "We are trying to build a computer brain that can process sounds in noisy environments like the human brain, so you can talk to the computer and it can understand what you are saying."

Ultimately, the research has the potential to benefit numerous engineering and communication applications, for example in cell phones, in industry, on the battlefield, in the design of new and better hearing aids, and in improving the automated telephone systems that many consumers currently find both annoying and frustrating.

"The knowledge generated from our work should be able to target those robotic systems," she says. "The reason they don't work now is because they were not designed to deal with complex and unknown environments. They were designed to work in a controlled laboratory, so the system just falls apart."

The research ultimately aims to improve communication among humans or between humans and machines. "We are hoping to learn from the biology to design better systems," she says. "Performance of hearing systems and speech technologies can benefit greatly from a deeper appreciation and knowledge of how the brain processes and perceives sounds."

Elhilali is conducting her research under a National Science Foundation (NSF) Faculty Early Career Development (CAREER) award, which she received in 2009 with funding NSF made available under the American Recovery and Reinvestment Act. The award supports junior faculty who exemplify the role of teacher-scholars through outstanding research, excellent education, and the integration of education and research within the context of the mission of their organization. NSF is funding her work with about $550,000 over five years.

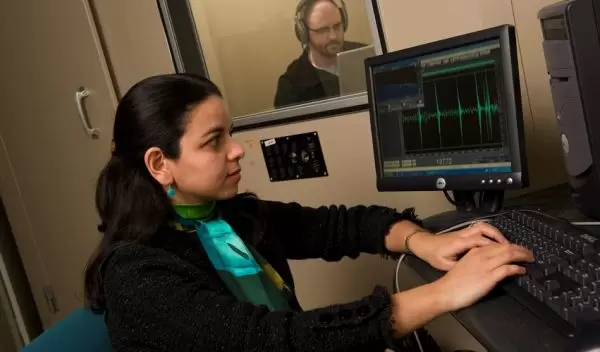

Typically, "laboratory sounds are stripped down versions of the sounds that we hear in everyday life," Elhilali says. "To better understand the complex sound environments that surround us, we need to test our listeners in a variety of conditions. We bring people to a sound booth and play them various sounds that simulate different listening environments. We ask them to pay attention to different sound elements and report back what they hear, or simply tell us if they hear something unusual."

For example, when listening to a conversation among a group of males, the introduction of a female voice, or a melody, will attract attention, and listeners often indicate this as an unexpected event, she says. "We hope to learn more about how the listeners' different expectations bias how they hear sounds and how they interpret the world surrounding them," she says.

Eventually, researchers intend to directly measure brain activity using electroencephalography (EEG), a technique that detects electrical activity along the scalp, "and tells us how the brain is processing sounds," she says. "Different parts of the brain communicate by sending electrical signals, and we are hoping to see how patterns of activity in the brain change when we change the listening environment from controlled to complex."

With the education component of the grant, Elhilali is developing an interdisciplinary curriculum aimed at encouraging undergraduates to explore both science and engineering and to recognize that the two fields complement one another. "I want students to realize that they can translate their scientific knowledge into practical engineering experience," she says.