Complex geometric models made simple

Researchers at Carnegie Mellon University have developed an efficient way to analyze complex geometric models by borrowing a computational approach that made photorealistic animated films possible. The method improves on the conventional approach of dividing shapes into meshes.

Rapid improvements in sensor technology have generated vast amounts of new geometric information, from scans of ancient architectural sites to scans of human internal organs. But analyzing that mountain of data, whether to determine if a building is structurally sound or how oxygen flows through lungs, has become a computational chokepoint.

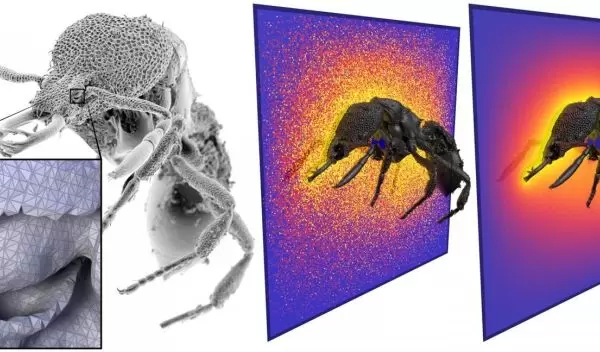

Now National Science Foundation-funded scientists are taming that data monster by simulating how particles and heat move through or within a complex shape. The process eliminates the need to painstakingly divide shapes into meshes -- collections of small geometric elements that can be computationally analyzed.

Building meshes is a minefield of possible errors, researchers say. If one element is distorted, it can throw off the entire computation.

Meshing was also a problem for filmmakers trying to create photorealistic animations in the 1990s. Not only was meshing laborious and slow, but the results didn't look natural. Their solution was to add randomness to the process by simulating light rays that could bounce around a scene. The result was beautifully realistic lighting, rather than flat-looking surfaces and blocky shadows.

Likewise, researchers have now embraced randomness in geometric analysis. They aren't bouncing light rays through structures, but are using modern methods to simulate how particles, fluids and heat randomly interact and move through space.

The scientists' work revives a little-used "walk on spheres" algorithm that makes it possible to simulate a particle's long, random walk through a space without determining each twist and turn.
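To give a feel for the idea, here is a minimal Python sketch of the classic walk-on-spheres estimator for a simple heat-type problem (the Laplace equation with fixed boundary values). It is an illustration only, not the researchers' implementation. The unit-disk domain, the boundary function, the tolerance `eps` and all function names are assumptions chosen for this example. Each walk jumps straight to a random point on the largest circle that fits inside the shape, so it never has to trace the particle's individual twists and turns, and no mesh is built.

```python
import math
import random


def distance_to_boundary(x, y):
    # Assumed domain: the unit disk. Distance from (x, y) to the unit circle.
    return 1.0 - math.hypot(x, y)


def boundary_value(x, y):
    # Assumed boundary data: the value at a boundary point equals its
    # x-coordinate. For this choice the exact interior solution is u(x, y) = x.
    return x


def walk_on_spheres(x, y, eps=1e-3, max_steps=1000, rng=random):
    """Run one random walk from (x, y); return the boundary value it reaches."""
    for _ in range(max_steps):
        r = distance_to_boundary(x, y)
        if r < eps:
            break  # close enough to the boundary to stop
        # Jump to a uniformly random point on the largest circle around
        # (x, y) that still fits inside the domain.
        theta = rng.uniform(0.0, 2.0 * math.pi)
        x += r * math.cos(theta)
        y += r * math.sin(theta)
    # Snap to the nearest point on the unit circle and read the value there.
    norm = math.hypot(x, y)
    return boundary_value(x / norm, y / norm)


def estimate(x, y, n_walks, seed=0):
    """Average the results of n_walks independent walks starting at (x, y)."""
    rng = random.Random(seed)
    total = sum(walk_on_spheres(x, y, rng=rng) for _ in range(n_walks))
    return total / n_walks


if __name__ == "__main__":
    # More walks give a less noisy estimate of the exact value, 0.3.
    for n in (10, 100, 1000, 10000):
        print(n, estimate(0.3, 0.2, n))
```

Running the script prints estimates for increasing numbers of walks. A handful of walks already gives a rough answer, and averaging more of them reduces the noise.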

While it might take a day just to build a mesh of a geometric space, the new approach allows users to get a preview of the solution in just a few seconds. This preview can then be refined by taking more and more random walks.

The researchers will present their new method at the SIGGRAPH 2020 Conference on Computer Graphics and Interactive Techniques, to be held virtually this month.