The Office of Legislative and Public Affairs is the principal point of contact between Congress and the U.S. National Science Foundation.

The office's congressional team:

- Coordinates requests for information.

- Coordinates NSF officials' appearances at congressional hearings.

- Ensures timely responses to congressionally mandated reports and correspondence.

- Arranges meetings with Members of Congress and their staff.

- Arranges site visits to NSF-supported facilities.

Events

NSF regularly hosts briefings open to all interested congressional staff to highlight the breadth and impact of NSF investments in science and engineering and in the STEM workforce of today and tomorrow.

National Artificial Intelligence Research Resource Demo

May 22, 2024

Sponsored by the House Artificial Intelligence Caucus

Along with the Department of Energy, NASA and the National Institutes of Health, NSF provided hands-on demonstrations of the kind of groundbreaking AI innovation that is being made possible through the National Artificial Intelligence Research Resource (NAIRR) Pilot and could be fueled through a fully realized NAIRR.

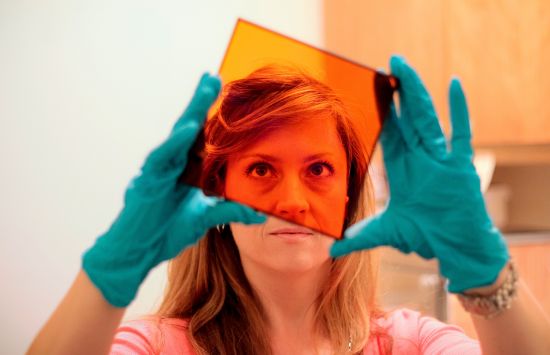

NSF Quantum Research Showcase

April 30, 2024

Sponsored by the House Committee on Science, Space, and Technology

This event brought 37 researchers, students and educators from around the country to discuss ongoing research, education and workforce initiatives through demonstrations and prototypes.

NSF Artificial Intelligence Research Institutes Showcase

Sept. 19, 2023

Sponsored by the Senate AI Caucus

Joined by federal and industry partners, NSF celebrated and shared the work of its 25 National AI Research Institutes.

About NSF

Agency overview

Since 1950, NSF has supported science and engineering in all 50 states and U.S. territories.

Impacts

Since 1950, NSF has invested in ideas and innovations that have shaped the modern world.

Focus areas

NSF accelerates new technologies and big ideas — from biology to emerging technologies.

'CHIPS and Science' and NSF

Investments in NSF under the "CHIPS and Science Act of 2022" will help the United States remain a global leader in innovation.

Fiscal Year 2026 Budget Request

Released on May 2, 2025, the President’s FY2026 Discretionary Budget Request of $3.9 billion for the U.S. National Science Foundation (NSF) reflects a strategic alignment of resources in a constrained fiscal environment, in which NSF prioritizes investments that can have the greatest national impact.

NSF in your state

State fact sheets

These brief summaries include the total amount of awards to institutions within a state, some of the state's institutions receiving the awards and examples of projects currently funded in the state.

Award Abstracts Database

View information about research projects that NSF has funded since 1989, including abstracts that describe the research and names of principal investigators and their institutions. The database includes both completed and in-process research.

NSF by the Numbers

This dashboard provides statistical and funding information on awards funded, institutions funded, funding rates, proposals evaluated and award obligations, by fiscal year.

Hearings: Testimonies and summaries

118th Congress (2023–24)

Date: Thursday, May 23, 2024.

Time: 9:30 a.m.

Location: Dirksen Senate Office Building 192.

Hearing: A Review of the President's Fiscal Year 2025 Budget Request for the National Aeronautics and Space Administration and for the National Science Foundation.

Committee: Senate Committee on Appropriations; Subcommittee on Commerce, Justice, Science, and Related Agencies.

Witnesses: The Honorable Sethuraman Panchanathan (PDF, 194.33 KB), Director, NSF; The Honorable Bill Nelson, Administrator, NASA.

Webcast: https://www.appropriations.senate.gov/hearings/a-review-of-the-presidents-fiscal-year-2025-budget-request-for-the-national-aeronautics-and-space-administration-and-for-the-national-science-foundation.

Date: Thursday, May 16, 2024.

Time: 10:00 a.m.

Location: 2318 Rayburn House Office Building.

Hearing: Oversight and Examination of the National Science Foundation's Priorities for 2025 and Beyond.

Committee: House Committee on Science, Space, and Technology; Subcommittee on Research and Technology.

Witnesses: The Honorable Sethuraman Panchanathan (PDF, 194.28 KB), Director, NSF; Dan Reed, former Chair, National Science Board.

Webcast: https://science.house.gov/2024/5/research-and-technology-subcommittee-hearing-oversight-and-examination-of-the-national-science-foundation-s-priorities-for-2025-and-beyond.

Date: Thursday, Feb. 15, 2024.

Time: 10:00 a.m.

Location: 2318 Rayburn House Office Building.

Hearing: Examining Federal Science Agency Actions to Secure the U.S. Science and Technology Enterprise.

Committee: House Committee on Science, Space, and Technology.

Witnesses: Rebecca Keiser (PDF, 260.02 KB), Chief of Research Security Strategy and Policy, NSF; The Honorable Arati Prabhakar, Director, White House Office of Science and Technology Policy; The Honorable Geri Richmond, Under Secretary for Science and Innovation, Department of Energy; Dr. Michael Lauer, Deputy Director for Extramural Research, National Institutes of Health.

Webcast: https://science.house.gov/2024/2/full-committee-hearing-examining-federal-science-agency-actions-to-secure-the-u-s-science-and-technology-enterprise.

Date: Tuesday, Feb. 6, 2024.

Time: 10:00 a.m.

Location: 2318 Rayburn House Office Building.

Hearing: Federal Science Agencies and the Promise of AI in Driving Scientific Discoveries.

Committee: House Committee on Science, Space, and Technology; Subcommittee on Research and Technology; Subcommittee on Energy.

Witnesses: Tess DeBlanc Knowles (PDF, 197.84 KB), Special Assistant to the Director for Artificial Intelligence, NSF; Georgia Tourassi, Associate Laboratory Director, Computing and Computational Sciences, Oak Ridge National Laboratory; Chaouki Abdallah, Executive Vice President for Research, Georgia Institute of Technology; Louay Chamra, Dean, School of Engineering and Computer Science, Oakland University; Jack Clark, Co-founder and Head of Policy, Anthropic.

Webcast: https://science.house.gov/2024/2/joint-research-technology-and-energy-subcommittee-hearing-federal-science-agencies-and-the-promise-of-ai-in-driving-scientific-discoveries.

Date: Tuesday, Jan. 30, 2024.

Time: 2:00 p.m.

Location: 2318 Rayburn House Office Building.

Hearing: From Risk to Resilience: Reauthorizing the Earthquake and Windstorm Hazards Reduction Programs.

Committee: House Committee on Science, Space, and Technology; Subcommittee on Research and Technology.

Witnesses: Susan Margulies (PDF, 211.17 KB), Assistant Director for Engineering, NSF; Jason Averill, Deputy Director of the Engineering Laboratory, National Institute of Standards and Technology; Edward Laatsch, Director, Safety, Planning, and Building Science Division, Risk Analysis, Planning and Information Directorate, Federal Emergency Management Agency; Gavin Hayes, Earthquake Hazards Program Coordinator, U.S. Geological Survey.

Webcast: https://science.house.gov/2024/1/research-and-technology-subcommittee-hearing-from-risk-to-resilience-reauthorizing-the-earthquake-and-windstorm-hazards-reduction-programs.

Date: Wednesday, Oct. 4, 2023.

Time: 2:00 p.m.

Location: Russell Senate Office Building 253.

Hearing: CHIPS and Science Implementation and Oversight.

Committee: Senate Committee on Commerce, Science, and Transportation.

Witnesses: The Honorable Sethuraman Panchanathan (PDF, 238.93 KB), Director, NSF; The Honorable Gina Raimondo, Secretary, U.S. Department of Commerce.

Webcast: https://www.commerce.senate.gov/2023/10/chips-and-science-implementation-and-oversight.

Date: Thursday, Sept. 14, 2023.

Time: 10:00 a.m.

Location: 2154 Rayburn House Office Building.

Hearing: Oversight of Federal Agencies' Post-Pandemic Telework Policies.

Committee: House Committee on Oversight and Accountability; Subcommittee on Government Operations and the Federal Workforce.

Witnesses: Karen Marrongelle (PDF, 144.88 KB), Chief Operating Officer, NSF; Robert Gibbs, Associate Administrator for the Mission Support Directorate, NASA; Dan Dorman, Executive Director for Operations, Nuclear Regulatory Commission; Randolph "Tex" Alles, Deputy Under Secretary for Management and Senior Official Performing the Duties of the Under Secretary for Management, Department of Homeland Security.

Webcast: https://oversight.house.gov/hearing/oversight-of-federal-agencies-post-pandemic-telework-policies/.

Date: Wednesday, April 26, 2023.

Time: 10:00 a.m.

Location: 2318 Rayburn House Office Building.

Hearing: An Overview of the National Science Foundation Budget Proposal for Fiscal Year 2024.

Committee: House Committee on Science, Space, and Technology.

Witnesses: The Honorable Sethuraman Panchanathan (PDF, 253.3 KB), Director, NSF; Dan Reed, Chair, National Science Board.

Webcast: https://science.house.gov/2023/4/full-committee-hearing-an-overview-of-the-national-science-foundation-budget-proposal-for-fiscal-year-2024.

Date: Wednesday, April 19, 2023.

Time: 9:30 a.m.

Location: H-309, the Capitol.

Hearing: Fiscal Year 2024 Request for the National Science Foundation.

Committee: House Committee on Appropriations; Subcommittee on Commerce, Justice, Science, and Related Agencies.

Witnesses: The Honorable Sethuraman Panchanathan (PDF, 254.36 KB), Director, NSF.

Webcast: https://democrats-appropriations.house.gov/events/hearings/budget-hearing-fiscal-year-2024-request-for-the-national-science-foundation

Date: Tuesday, April 18, 2023.

Time: 2:30 p.m.

Location: Dirksen Senate Office Building 192.

Hearing: A Review of the President's Fiscal Year 2024 Funding Request for the National Aeronautics and Space Administration and for the National Science Foundation.

Committee: Senate Committee on Appropriations; Subcommittee on Commerce, Justice, Science, and Related Agencies.

Witnesses: The Honorable Sethuraman Panchanathan (PDF, 254.45 KB), Director, NSF; The Honorable Bill Nelson, Administrator, NASA.

Webcast: https://www.appropriations.senate.gov/hearings/a-review-of-the-presidents-fiscal-year-2024-funding-request-for-the-national-aeronautics-and-space-administration-and-for-the-national-science-foundation.

Date: Wednesday, March 8, 2023.

Time: 10:00 a.m.

Location: 2318 Rayburn House Office Building.

Hearing: Innovation Through Collaboration: The Department of Energy's Role in the U.S. Research Ecosystem.

Committee: House Committee on Science, Space, and Technology.

Witnesses: Sean L. Jones (PDF, 187.76 KB), Assistant Director, Directorate for Mathematical and Physical Sciences, NSF; Harriet Kung, Deputy Director for Science Programs in the Office of Science, the U.S. Department of Energy; James L. Reuter, Associate Administrator for the Space Technology Mission Directorate, NASA; Michael C. Morgan, Assistant Secretary of Commerce for Environmental Observation and Prediction, the National Oceanic and Atmospheric Administration.

Webcast: https://science.house.gov/2023/3/full-committee-hearing.

117th Congress (2021–22)

Date: Tuesday, Dec. 6, 2022.

Time: 1:00 p.m.

Location: Online via videoconferencing and 2318 Rayburn House Office Building.

Hearing: Building a Safer Antarctic Research Environment.

Committee: House Committee on Science, Space, and Technology; Subcommittee on Research and Technology.

Witnesses: Karen Marrongelle, Chief Operating Officer, National Science Foundation; Kathleen Naeher, Chief Operating Officer of the Civil Group, Leidos; Angela V. Olinto, Dean of the Physical Sciences Division and Albert A. Michelson Distinguished Service Professor, University of Chicago; Anne Kelly, Deputy Director, The Nature Conservancy Alaska Chapter.

Webcast: https://science.house.gov/hearings/building-a-safer-antarctic-research-environment.

Additional resources: Learn about steps NSF is taking to prevent sexual assault and harassment.

Date: Wednesday, May 11, 2022.

Time: 2:00 p.m.

Location: Online via videoconferencing.

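Hearing: Fiscal Year 2023 Budget Request for the National Science Foundation.

Committee: House Committee on Appropriations; Subcommittee on Commerce, Justice, Science, and Related Agencies.

Witnesses: The Honorable Sethuraman Panchanathan (PDF, 205.76 KB), Director, NSF.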

Webcast: https://appropriations.house.gov/events/hearings/fiscal-year-2023-budget-request-national-science-foundation

Date: Tuesday, May 3, 2022.

Time: 10:00 a.m.

Location: Dirksen Senate Office Building SD-192.

Hearing: A Review of the President's Fiscal Year 2023 Funding Request for the National Aeronautics and Space Administration and the National Science Foundation.

Committee: Senate Committee on Appropriations; Subcommittee on Commerce, Justice, Science, and Related Agencies.

Witnesses: The Honorable Sethuraman Panchanathan (PDF, 210.4 KB), Director, NSF; The Honorable Bill Nelson, Administrator, NASA.

Webcast: https://www.appropriations.senate.gov/hearings/a-review-of-the-presidents-fiscal-year-2023-funding-request-for-the-national-aeronautics-and-space-administration-and-the-national-science-foundation.

Date: Wednesday, April 6, 2022.

Time: 10:00 a.m.

Location: Online via videoconferencing and 2318 Rayburn House Office Building.

Hearing: SBIR Turns 40: Evaluating Support for Small Business Innovation.

Committee: House Committee on Science, Space, and Technology; Subcommittee on Research and Technology.

Witnesses: Ben Schrag (PDF, 270.76 KB), SBIR/STTR Program Director and Policy Liaison, Directorate for Technology, Innovation, and Partnerships, NSF.

Webcast: https://science.house.gov/hearings?ID=B757DFF9-8EC0-4054-8598-4B54460D2E02.

Date: Wednesday, Nov. 10, 2021.

Time: 10:00 a.m.

Location: Online via videoconferencing.

Hearing: Weathering the Storm: Reauthorizing the National Wind Impact Reduction Program.

Committee: House Committee on Science, Space, and Technology; Subcommittee on Research and Technology.

Witnesses: Linda Blevins (PDF, 253.58 KB), Deputy Assistant Director, Directorate for Engineering, NSF.

Webcast: https://science.house.gov/hearings?ID=6B4DE5E0-7CEB-44FB-A692-E8BF5DA09620.

Date: Wednesday, April 28, 2021.

Time: 10:00 a.m.

Location: Online via videoconferencing.

Hearing: National Science Foundation: Advancing Research for the Future of U.S. Innovation.

Committee: House Committee on Science, Space, and Technology; Subcommittee on Research and Technology.

Witnesses: The Honorable Sethuraman Panchanathan, Director, NSF; Ellen Ochoa, Chair, National Science Board.

Webcast: https://science.house.gov/hearings?ID=AA1DF3D1-B4A3-4DD9-B5AC-B91DFC714AB5

Date: Wednesday, April 14, 2021.

Time: 10:00 a.m.

Location: Online via videoconferencing.

Hearing: The National Science Foundation's Fiscal Year 2022 Budget Request.

Committee: House Committee on Appropriations; Subcommittee on Commerce, Justice, Science, and Related Agencies.

Witnesses: The Honorable Sethuraman Panchanathan, Director, NSF.

Webcast: https://appropriations.house.gov/events/hearings/national-science-foundations-fiscal-year-2022-budget-request.

Date: Tuesday, April 13, 2021.

Time: 2:00 p.m.

Location: Dirksen Senate Office Building SD-106.

Hearing: A Review of the President's Fiscal Year 2022 Funding Request for the National Science Foundation and Securing U.S. Competitiveness.

Committee: Senate Committee on Appropriations; Subcommittee on Commerce, Justice, Science, and Related Agencies.

Witnesses: The Honorable Sethuraman Panchanathan, Director, NSF.

Webcast: https://www.appropriations.senate.gov/a-review-of-the-presidents-fiscal-year-2022-funding-request-for-the-national-science-foundation-and-securing-us-competitiveness.

116th Congress (2019–20)

Date: Wednesday, Feb. 5, 2020.

Time: 4:00 p.m.

Location: 2318 Rayburn House Office Building.

Hearing: America's Seed Fund: A Review of SBIR and STTR.

Committee: House Committee on Science, Space, and Technology; Subcommittee on Research and Technology.

Witnesses: Dawn Tilbury, Assistant Director, Directorate for Engineering, National Science Foundation; Maryann Feldman, S.K. Heninger Distinguished Professor of Public Policy (Department of Public Policy), Adjunct Professor of Finance (Kenan-Flagler Business School) and Faculty Director of CREATE (Kenan Institute of Private Enterprise), The University of North Carolina at Chapel Hill; Nicholas Cucinelli, Chief Executive Officer, Endectra LLC; Johnny Park, Chief Executive Officer, Wabash Heartland Innovation Network.

Webcast: https://science.house.gov/hearings?ID=A94F8D23-36C8-4EFC-87BB-9FA629AA8306.

Date: Wednesday, Jan. 15, 2020.

Time: 10:00 a.m.

Location: 216 Hart Senate Office Building.

Hearing: Industries of the Future.

Committee: Senate Committee on Commerce, Science, and Transportation.

Witnesses: The Honorable Walter Copan, Under Secretary of Commerce for Standards and Technology and Director, National Institute of Standards and Technology, Department of Commerce; The Honorable France Córdova, Director, NSF; The Honorable Michael Kratsios, Chief Technology Officer of the United States, Office of Science and Technology Policy; The Honorable Michael O'Rielly, Commissioner, Federal Communications Commission; The Honorable Jessica Rosenworcel, Commissioner, Federal Communications Commission.

Webcast: https://www.commerce.senate.gov/2020/1/industries-of-the-future.

Date: Tuesday, Nov. 19, 2019.

Time: 10:00 a.m.

Location: 342 Dirksen Senate Office Building.

Hearing: Securing the U.S. Research Enterprise from China's Talent Recruitment Plans.

Committee: Senate Committee on Homeland Security and Governmental Affairs; Permanent Subcommittee on Investigations.

Witnesses: John Brown, Assistant Director, Counterintelligence Division, FBI, U.S. Department of Justice; Rebecca L. Keiser, Head, Office of International Science and Engineering, NSF; Dr. Michael S. Lauer, Deputy Director for Extramural Research, National Institutes of Health, U.S. Department of Health and Human Services; The Honorable Christopher Fall, Director, Office of Science, U.S. Department of Energy; Edward J. Ramotowski, Deputy Assistant Secretary for Visa Services, Bureau of Consular Affairs, U.S. Department of State.

Webcast: https://www.hsgac.senate.gov/subcommittees/investigations/hearings/securing-the-us-research-enterprise-from-chinas-talent-recruitment-plans.

Date: Tuesday, Sept. 24, 2019.

Time: 4:00 p.m.

Location: 2318 Rayburn House Office Building.

Hearing: Artificial Intelligence and the Future of Work.

Committee: House Committee on Science, Space, and Technology; Subcommittee on Research and Technology.

Witnesses: Arthur Lupia, Assistant Director, Directorate for Social, Behavioral and Economic Sciences, NSF; Erik Brynjolfsson, Schussel Family Professor of Management Science and Director, The MIT Initiative on the Digital Economy, Massachusetts Institute of Technology; Rebekah Kowalski, Vice President, Manufacturing Services, ManpowerGroup; Sue Ellspermann, President, Ivy Tech Community College.

Webcast: https://science.house.gov/hearings?ID=8F55D718-BA71-4771-A28F-903B3F168498.

Date: Thursday, Sept. 19, 2019.

Time: 10:00 a.m.

Location: H-309, the Capitol.

Hearing: Science, Technology, Engineering, and Mathematics (STEM) Engagement.

Committee: House Committee on Appropriations; Subcommittee on Commerce, Justice, Science, and Related Agencies.

Witnesses: Michael Kincaid, Associate Administrator for STEM Engagement, NASA; Karen Marrongelle, Assistant Director for Education and Human Resources, NSF.

Webcast: https://www.congress.gov/event/116th-congress/house-event/109933

Date: Thursday, May 16, 2019.

Time: 10:00 a.m.

Location: 2318 Rayburn House Office Building.

Hearing: Event Horizon Telescope: The Black Hole Seen Round the World.

Committee: House Committee on Science, Space, and Technology; Subcommittee on Research and Technology.

Witnesses: France Córdova, Director, NSF; Sheperd Doeleman, Director, Event Horizon Telescope, Center for Astrophysics, Harvard and Smithsonian; Colin Lonsdale, Director, MIT Haystack Observatory; Katherine Bouman, Postdoctoral Fellow, Center for Astrophysics, Harvard and Smithsonian.

Webcast: https://science.house.gov/hearings?ID=4790768C-C620-498C-A2C7-C372B101426C.

Date: Wednesday, May 8, 2019.

Time: 10:00 a.m.

Location: 2318 Rayburn House Office Building.

Hearing: A Review of the National Science Foundation FY 2020 Budget Request.

Committee: House Committee on Science, Space, and Technology; Subcommittee on Research and Technology.

Witnesses: France Córdova, Director, NSF; Diane Souvaine, Chair, National Science Board.

Webcast: https://science.house.gov/hearings?ID=CB747AB9-2DA7-4C66-8003-91FA0944387B.

Date: Tuesday, March 26, 2019.

Time: 9:30 a.m.

Location: H-309, the Capitol.

Hearing: National Science Foundation's Budget Request for Fiscal Year 2020.

Committee: House Committee on Appropriations; Subcommittee on Commerce, Justice, Science, and Related Agencies.

Witness: France Córdova, Director, NSF.

Webcast: https://democrats-appropriations.house.gov/legislation/hearings/national-science-foundation-s-budget-request-for-fiscal-year-2020.

Additional resources

Budget, performance and financial reporting

Learn how NSF works to ensure funds are used effectively to support the agency's mission and strategic goals.

COVID-19 funding updates

View NSF's funding reports for the "American Rescue Plan Act of 2021" and the "Coronavirus Aid, Relief and Economic Security Act."

Academic technology transfer and commercialization

View a list of webpages describing the technology transfer and commercialization efforts at institutions of higher education.

Responses to congressional wastebooks

View NSF's responses to congressional wastebook reports.

Contact us

Email: Congressionalteam@nsf.gov

Phone: 703-292-8070