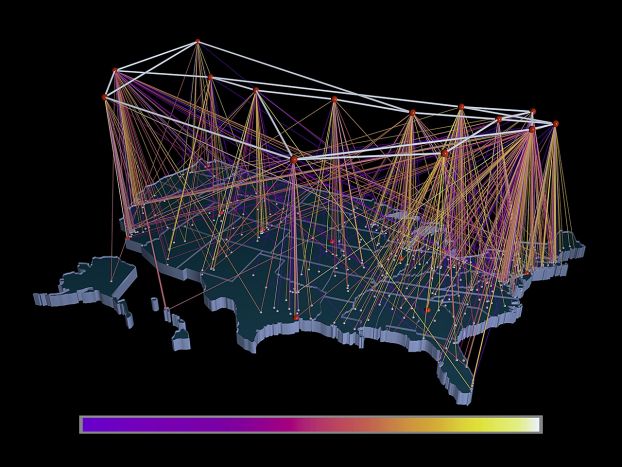

Credit: Dan Murphy

ARPANET: Paving the way

Between 1962 and 1963, Joseph Carl Robnett Licklider wrote a series of memos detailing the earliest ideas and challenges behind establishing an "Intergalactic Computer Network" where anyone could quickly access data and information on every subject imaginable. These ideas ultimately led to the creation of the internet's direct precursor, the Advanced Research Projects Agency Network (ARPANET), run by the Defense Advanced Research Projects Agency (DARPA).

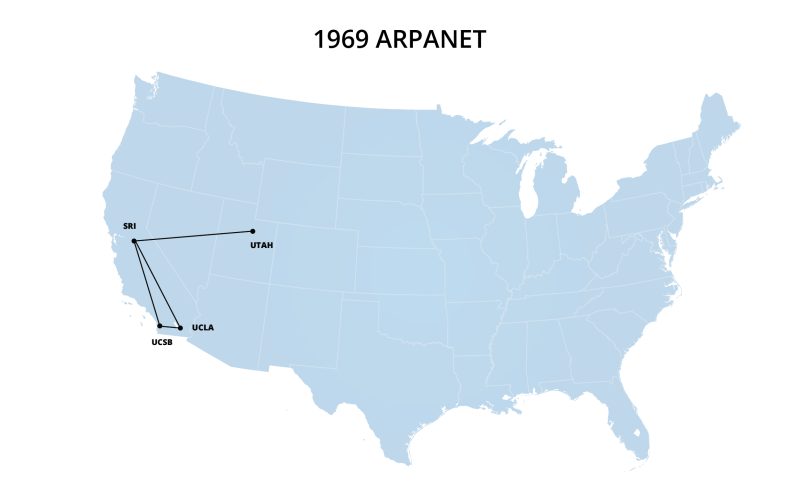

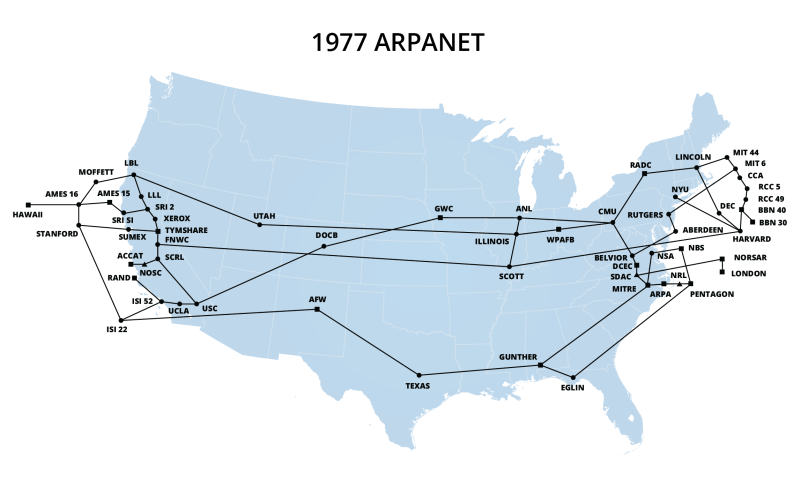

In its earliest form, ARPANET began with four computer nodes in late 1969. Over the next two decades, DARPA-funded researchers expanded ARPANET and designed internet protocols, such as Transmission Control Protocol/Internet Protocol (TCP/IP), that let computers exchange data and support services such as email, computer-to-computer file transfer and remote login.

NSFNET: The backbone of the early internet

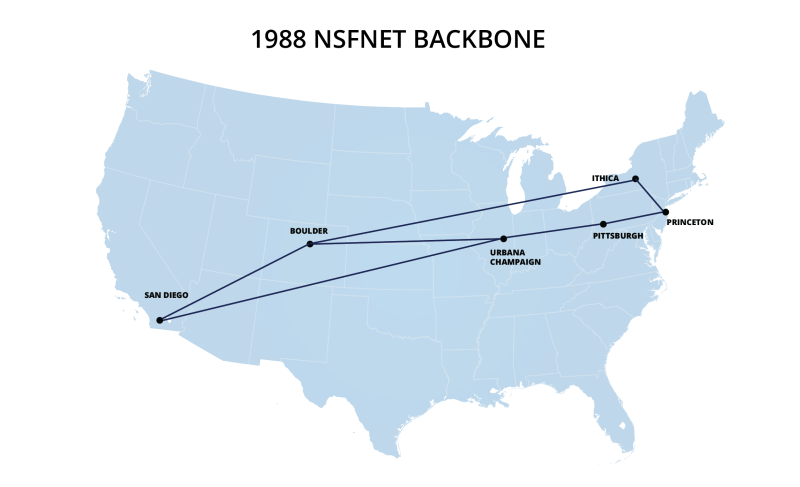

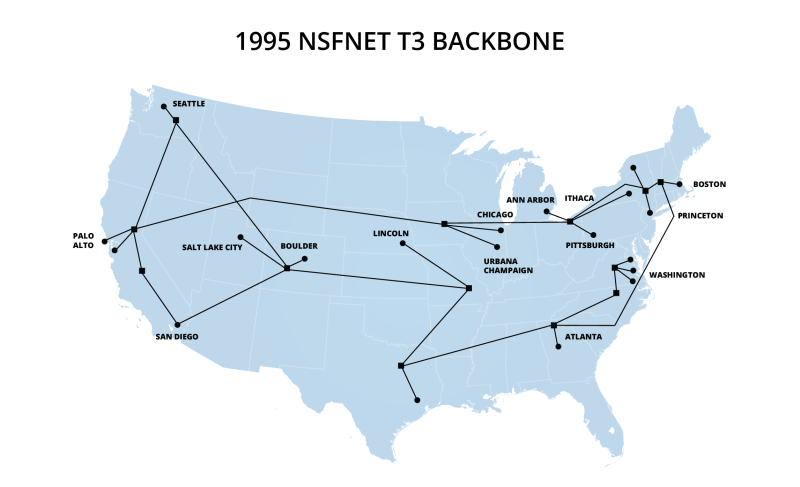

One of the most significant TCP/IP-based networks was NSFNET, launched in 1986 by NSF to connect academic researchers to a new system of supercomputer centers.

As the first network available to every researcher, NSFNET became the de facto U.S. internet backbone, connecting around 2,000 computers in 1986 and expanding to more than 2 million by 1993.

NSFNET laid the foundation for the internet's explosive worldwide growth in the 1990s.

Credit: Donna Cox and Robert Patterson / National Center for Supercomputing Applications and the Board of Trustees of the University of Illinois

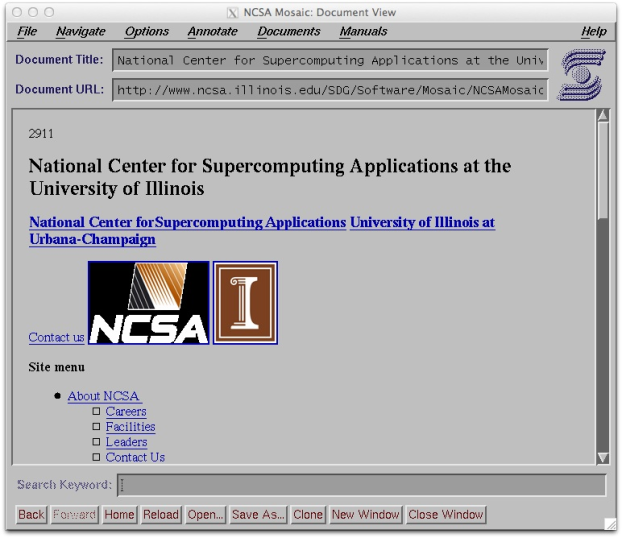

Credit: Charles Severance

Going public

Commercial firms noted the popularity and effectiveness of the growing internet and began to build their own network infrastructure, eventually offering products that provided basic connectivity and internet services.

During the 1990s, NSF helped shape the growth and operation of the modern internet, funding the development of the world's first freely available web browser — Mosaic — and algorithms to reduce internet congestion.

The rapid expansion of commercial internet services prompted NSF to shut down its dedicated infrastructure backbone in 1995.

The Mosaic web browser allowed users to view webpages that included both graphics and text, spurring a revolution in communications, business, education and entertainment that has had a trillion-dollar impact on the global economy.

Mosaic was developed out of NSF-funded research at the National Center for Supercomputing Applications at the University of Illinois Urbana-Champaign.

NSF after NSFNET

The decommissioning of NSFNET and privatization of the internet did not mark the end of NSF's involvement in networking.

NSF continues to support many research projects to develop new networking tools, online educational resources and network-based applications.

Over the years, NSF has also been instrumental in providing international connection services that have bridged the U.S. network infrastructure with countries and regions including Europe, Mongolia, Africa, Latin America, Russia and the Pacific Rim.

Credit: Courtesy of Duolingo