NSF M3X: Integrating human expertise and AI to transform health care

Artificial intelligence, machine learning and advanced robotics are dramatically transforming society, and health care is one of the areas where these technologies could solve many of the issues facing the field today. The integration of AI and machine learning with physical processes can create systems that interact with their environment and offer the potential to have a positive impact on millions of lives.

These intelligent engineered systems collect data, analyze it to make informed decisions, and take actions that enhance safety, efficiency and well-being. But as human-machine interactions become more integrated into health care (such as helping stroke victims recover using adaptive wearable technology, understanding how visually impaired people navigate environments using virtual reality, or adjusting the precise movements of surgical equipment during operations), it is critical to build trust in the technology for both doctors and patients.

The U.S. National Science Foundation Mind, Machine and Motor Nexus program (NSF M3X) supports research that improves understanding of interactions between humans and "synthetic actors" in a broad range of settings to enhance and ensure safety, productivity and well-being. This research is advancing understanding of how best to use AI and robotics to save and improve lives.

Helping the blind navigate

For people with visual impairments, relying on audio and tactile cues to move through their surroundings can be difficult in dense urban environments, and this challenge is compounded in emergencies. At New York University, researchers are using virtual reality simulations to study how visually impaired people navigate crowded surroundings safely.

Participants navigate through three different urban scenarios designed to reflect real-world challenges for people with visual impairments: crossing a busy street while avoiding pedestrians and vehicles, reaching a designated location in a crowded shopping plaza, and evacuating a building during an emergency. The participants receive audio feedback, such as footsteps, traffic sounds and emergency alarms, as well as tactile feedback from a device worn around the abdomen to indicate collisions.

The researchers also plan to work with glaucoma patients, drawing on longstanding collaborations with visually impaired people from previous studies. The team's work has the potential to improve nonvisual feedback provided to the visually impaired in real life and could even lead to new assistive technology used during emergencies.

At-home balance training

Whether due to aging, physical incapacitation or sensory impairment, losing the ability to balance leads to a more sedentary lifestyle and can increase a person's chance of injury. By 2050, the U.S. population over 65 is expected to more than double, making the need for effective balance training even more important. Clinic-based physical therapy can be costly and may not even be a viable option. At-home training can help, but without expert feedback, effectiveness is limited.

Researchers at the University of Michigan are using machine learning models trained on examples of physical therapy evaluations to develop a wearable device that brings the expertise of a physical therapist to at-home care. The system mirrors doctor-patient interactions by gathering data on both patient movement and therapist eye movement. To date, the data-driven models predict balance ratings with nearly 90% accuracy, within a point of expert ratings on the same scale.

The team found that using four wearable sensors — on the left and right thighs, lower back and left wrist — provided the best balance assessment results. Patients modify their movement according to evaluations, and therapists adjust their improvement plans based on patient performance. This allows the device to provide individualized feedback for its wearer. The team also sees potential breakthroughs in walking rehabilitation.

Upper limb prostheses have advanced considerably, including the development of motorized hands and fingers that can move much like natural limbs. While these are remarkable, they cannot provide a sense of touch and pressure, which is essential for everyday tasks such as holding an egg without cracking it or picking up a glass of water.

A multidisciplinary team of engineers, scientists and physicians at Johns Hopkins University is developing a prosthesis that provides haptic feedback to allow more delicate tasks by taking into account user intention and environmental cues. Beyond restoring "touch" to amputees, this research could lead to other new assistive technologies.

One of the main focuses is designing a robotic hand that allows the user to mimic the fluid, automatic movements of natural limbs. To do this, the team designed a prosthesis based on the tendon movements of real hands and developed a device that responds to and provides feedback to the user rather than the environment.

Individualized teleoperations

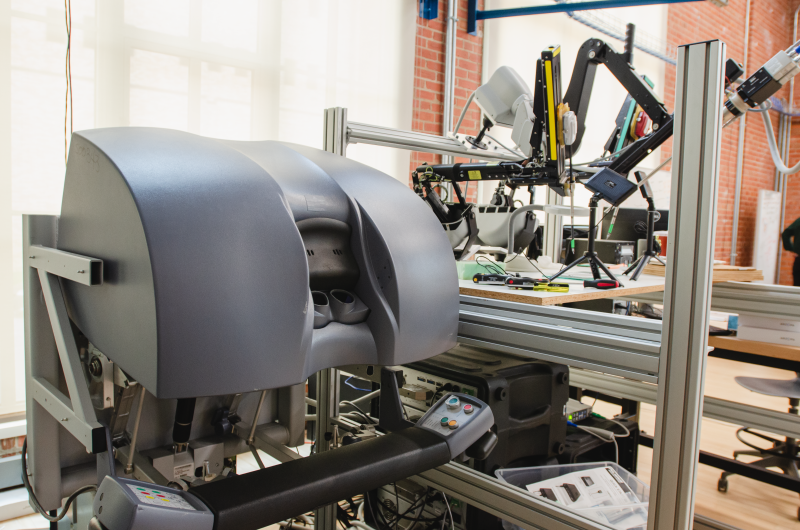

Smooth collaboration between humans and machines is critical during remote surgery, when doctors may not even be in the same room as their patient. Ann Majewicz Fey at The University of Texas at Austin is working to create new models that allow surgical robotic arms to better respond to — and possibly augment — human inputs based on the user's intent, movement style and level of expertise.

To develop a surgical tool that can effectively augment a doctor's performance, researchers first need to understand the movements of the surgeon. "With robotic surgery, there's a lot of wrist rotation that's important, so we put EMG trackers on what's called the pronator teres, which is a muscle that controls your wrist rotation. We looked at the extensors and flexors for the wrists. We even looked at feet because there are some pedals that you have to control," Fey said.

Based on this data, the team has started testing methods for providing feedback to trainees in real time and has demonstrated significant improvement in trainees completing a peg transfer task, a common surgical training task. More recently, her team has been developing new methods to provide feedback to surgeons as efficiently as possible using wearable wristbands.

Training for endovascular surgery

During surgery, patients depend on the speed, precision and experience of their doctor. New NSF-supported technologies are helping to ensure surgeons are well-trained while also enhancing their abilities in the operating room.

In endovascular surgery, catheters are threaded into arteries to perform operations within blood vessels. These minimally invasive procedures allow doctors to treat cardiovascular disease, carotid artery disease and aneurysms with less risk to the patient, but the procedures require smooth, precise movements. To help surgeons train, researchers at Rice University are combining virtual reality simulations with a vibrating wristband to test how vibrations in response to either jerky movements or inaccuracy affect performance.

One finding from their work is that there is a clear benefit for surgeons to train for speed first and then work on improving their accuracy. Two different groups of surgeons were studied. One trained for speed, then accuracy, while the other trained in accuracy first and then speed. The researchers found that after switching goals, the second group lost all of their gains in speed and smoothness, while the group that trained in speedy, fluid movement first saw only a minor drop in skill and far higher overall performance.